How does ChatGPT work? A brief history of computational language understanding

Unless you’ve been living under a rock with no internet access, you’ve no doubt heard examples of how people are using ChatGPT, and prophecies of how it is set to change the course of society as we know it. When something this interesting shows up, curious minds want to know how it works. Despite having worked on machine learning for over a decade and having an active interest in the space, I found reading the research papers daunting at first. Each paper I tried to understand referred to concepts I did not know, and the papers explaining those concepts referred to yet more unfamiliar concepts. It took substantial resolve to keep following the rabbit holes until I understood, from first principles, the papers that describe how ChatGPT and similar technologies work.

This article is my attempt at simplifying the timeline of technical advancements that led to ChatGPT as we know it today. If you understand basic computer science and probability, you should have a strong enough foundation to follow along. Without getting into any complex math or very technical discussion, you should get some sense of how these things are wired.

Keep in mind that no one really understands how these models do what they do, in the same way that no one really knows how they learn to ride a bike or walk. But that doesn’t mean we don’t have some handle on what’s going on, and we certainly know how we arrived here.

In this four-part series we’ll cover:

A brief history of computational language understanding, starting from the early collaboration of linguists and computer scientists (This article)

Transformer architecture – The engine behind ChatGPT, including a brief study of sequence-to-sequence models starting from RNNs ending at Transformers.

Emerging properties of ChatGPT and other large language models (LLMs), where we’ll explore how language models are built using very large Transformer models that have surprising properties.

The future of AI – trends to watch for in a ChatGPT world, including applications, future research, and implications for our world.

There is a lot to unpack, so let’s get started!

What is ChatGPT and how does it work

GPT stands for Generative Pre-trained Transformer. The chat prefix to GPT was added because of the chat interface added to the GPT models. ChatGPT is an AI language model developed by OpenAI that can generate a natural language response to human input—basically, it’s an advanced chatbot.

To get started, we must first examine the two interwoven threads of computational language understanding and machine learning using neural networks. Both are fairly deep and technical topics, but my hope here is that at the expense of some accuracy, we can simplify the concepts enough that anyone with basic computer science knowledge can get an understanding.

Early natural language processing (NLP)

Computer scientists and linguists have been collaborating for at least half a century in an attempt to make computer programs understand language. Most people in the field believed that the path to doing so involved creating taxonomies of words, breaking sentences into parse trees, assigning part-of-speech tags, and then using templates and rules to derive meaning. But as early as the 1950s people realized that a lot of information could be gained by looking at language purely from a statistical standpoint. For example, a word’s significance to a document grows with the number of times it appears in that document and shrinks with how frequently it occurs in other documents. The first half of this idea was observed in 1957 and the second half followed in 1972; some version of the combined measure (TF-IDF, term frequency times inverse document frequency) is still used in most search engines today. If someone searches for “the indic languages”, the most significant word to match would be “indic”, followed by “languages”, followed by “the”, because “indic” is the rarest word in that phrase and “the” is the most common.
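As a rough illustration of the idea, here is a minimal sketch of the textbook TF-IDF formula in Python. The four-document corpus and the function name `tf_idf` are made up for this example, and real search engines use more elaborate, smoothed variants.

```python
import math
from collections import Counter

# Hypothetical toy corpus; each document is a list of lowercase words.
documents = [
    "the indic languages are spoken in the indian subcontinent".split(),
    "the romance languages descend from latin".split(),
    "the weather in the city is mild".split(),
    "the languages of the world are diverse".split(),
]

def tf_idf(word, document, corpus):
    """Term frequency in `document` times log-scaled inverse document frequency."""
    tf = Counter(document)[word] / len(document)
    docs_with_word = sum(1 for doc in corpus if word in doc)
    # A word that appears in every document gets idf = log(1) = 0.
    idf = math.log(len(corpus) / docs_with_word) if docs_with_word else 0.0
    return tf * idf

query = ["the", "indic", "languages"]
scores = {word: tf_idf(word, documents[0], documents) for word in query}
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked)
```

In this toy corpus “the” appears in every document, so its score is zero, while “indic” appears in only one document and ranks first.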

Language Models

Quickly fill in the blank without thinking too much: The dog [blank] so loudly that it was painful. If you are like most people, you guessed barked and not scratched. If you were to assign a probability to every possible word, you would probably give a fairly high probability to barked and a very low probability to anything else. This process of computing probabilities is called Language Modeling. ChatGPT is essentially a language model. It is also a very large neural network: GPT-3, the model family it builds on, has 175 billion programmable connections, which is why it is called a Large Language Model (LLM). How large is large? For comparison, the human brain is estimated to have 10^15 or 1000 trillion connections.

The fun thing about language models is that you don’t have to stop after one prediction. You can append the predicted word to your text, make the next prediction, append that, and so on until you have a full page of text. You can see this happen in real time if you have predictive text turned on for your phone keyboard. Most phone keyboards give you ranked suggestions for the next word. If you keep accepting the top suggestion, you usually end up with a bizarre but interesting piece of text created entirely by the phone’s language model. These models are nowhere near the sophistication of ChatGPT.

The simplest language model would just count pairs of words that occur next to each other in a large body of text and then, given the first word, look at the histogram of the words that follow it. This is essentially where language models started. For example, after the word “phishing”, the most likely words may be “email” or “attack”. If you look at Google’s auto-completions, they roughly match that intuition.
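The pair-counting model, together with the predict-and-append loop described earlier, can be sketched in a few lines of Python. The tiny corpus and the function names `next_word_probs` and `generate` are assumptions made for illustration; a real model would be built from a far larger body of text.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus, standing in for "a large body of text".
corpus = (
    "the dog barked loudly . the dog barked at the cat . "
    "the cat slept . the dog slept ."
).split()

# Count how often each word follows each other word.
bigram_counts = defaultdict(Counter)
for first, second in zip(corpus, corpus[1:]):
    bigram_counts[first][second] += 1

def next_word_probs(word):
    """Turn the histogram of followers of `word` into probabilities."""
    followers = bigram_counts[word]
    total = sum(followers.values())
    return {w: count / total for w, count in followers.items()}

def generate(start, length):
    """Repeatedly append the most likely next word (greedy prediction)."""
    words = [start]
    for _ in range(length):
        followers = bigram_counts[words[-1]]
        if not followers:
            break  # this word was never followed by anything in the corpus
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

print(next_word_probs("dog"))
print(generate("the", 2))
```

Always taking the single most likely word is only one option; picking randomly in proportion to the probabilities gives more varied text.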

N-grams

Next comes the idea of counting not just pairs of words (bigrams) but triplets (trigrams) and sequences of four, five, and so on. Collectively these are called N-grams. As the length of a sequence goes up, the number of possible sequences goes up exponentially, so not all N-grams can be stored and used in language modeling. Fortunately, as the length of a sequence goes up, the number of times you find that sequence in a body of text goes down, so if you keep only the sequences whose counts are above a threshold of statistical significance, the collection stays small enough to build. Once you have a collection of N-grams, a simplistic way to predict the next word is to find the longest sequence that matches the end of the current text and use the distribution of words that followed it as the probabilities. This was more or less the state of the art for language modeling for the longest time.
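Here is one way the longest-match idea could look in Python. This is a sketch under simplifying assumptions, not how any production system worked: the names `build_ngram_counts`, `predict`, and the `min_count` threshold parameter are invented for the example, and real systems used more careful smoothing.

```python
from collections import Counter, defaultdict

def build_ngram_counts(tokens, max_n, min_count=1):
    """Map each context (a tuple of up to max_n - 1 words) to counts of the next word."""
    counts = defaultdict(Counter)
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            *context, next_word = tokens[i:i + n]
            counts[tuple(context)][next_word] += 1
    # Keep only continuations seen at least `min_count` times.
    pruned = {}
    for context, followers in counts.items():
        kept = Counter({w: c for w, c in followers.items() if c >= min_count})
        if kept:
            pruned[context] = kept
    return pruned

def predict(counts, text, max_n):
    """Use the longest stored context that matches the end of `text`."""
    for k in range(min(max_n - 1, len(text)), -1, -1):
        context = tuple(text[len(text) - k:])
        if context in counts:
            followers = counts[context]
            total = sum(followers.values())
            return {w: c / total for w, c in followers.items()}
    return {}

tokens = "the dog barked loudly . the cat meowed loudly . the dog barked again .".split()
counts = build_ngram_counts(tokens, max_n=3)
print(predict(counts, ["the", "dog"], max_n=3))  # two-word context is found
print(predict(counts, ["a", "cat"], max_n=3))    # falls back to the one-word context
```

When the two-word context has never been seen, the function falls back to the last word alone, and finally to plain word frequencies.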

In fact, when I asked a friend at Google Translate in the late 2000s about how it worked, I was really surprised to learn that the core of it was simply matching n-grams in one language to n-grams in another language without much regard for grammar rules or anything else.

Representing concepts as big vectors

The N-gram model’s biggest drawback is that it has no information about the meaning of the word. In my opinion, representing words as big vectors was one of the biggest advancements in the early days of statistical NLP. Before vectors, people thought that the way to figure out synonymy and related words was to put words in a large tree (ontology) and then find how close they are to each other. In fact, there were armies of linguists whose job was to put words into ontologies. The problem with these word trees is that you’re forcing a somewhat arbitrary structure. For example, in a given ontology, Albert Einstein may show under People->Scientists->Physicists->Born in the 1800s, while the theory of relativity may show under Abstract Concepts->Scientific Concepts->Physics->Modern Physics. From this viewpoint, the two seem very far apart, yet they are clearly related concepts.

Imagine another way to organize words, where you are given a very large whiteboard and each word is represented by a fixed-size circle centered at a specific point on this 2D plane. The words are arranged on the whiteboard so that words that are related to each other are close together and words that are less related are far apart.

You may find organizing the words in this way nearly impossible, because if these circles are packed together, each circle can have only a small number of close neighbors (six, to be precise), but a word can have many different neighbors that are all slightly different in meaning. Now imagine that instead of a 2D plane we are in 3D and words are represented by spheres. This gives you a lot more room to pack related concepts together. In fact, the room grows exponentially with the number of dimensions. So by the time you reach 256 or more dimensions, this becomes an excellent way to represent the concepts behind words so that related concepts are close together and unrelated concepts are far apart. What this means is that each word can be represented by a few hundred real numbers that are the coordinates of the center of its sphere. These vectors of numbers are often called word embeddings.

This idea is so important that we are going to try and look at it another way. Suppose you limit yourself to the ten thousand most frequent words in the English language and everything else gets ignored in the following analysis. Also, imagine someone coming up with a thousand topics, and each word is scored on a scale of zero to one for how strongly it belongs to each topic. For example, “mango” may belong to the topic “fruit” with a strength of 1.0, to the topic “yellow objects” with a strength of 0.5, and to “abstract concept” with a strength of 0.0. In this way, each word can be represented by a vector of a thousand numbers. Now if we wanted to see if two words are related, all we need to do is see how far apart these vectors are. Of course, if the topics were not chosen well, this scheme will perform poorly. So all you have to do is come up with a thousand topics that would do a good job of capturing the essence of all the ten thousand words, and then come up with 1,000 x 10,000 = 10 million weights. This kind of work has been done manually. It takes a lot of effort and the results are usually mediocre.
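To make the “mango” example concrete, here is a small Python sketch with four topics instead of a thousand. The words, topics, and scores other than the three given for “mango” are invented for illustration.

```python
import math

# Hypothetical hand-assigned topic strengths on a 0-to-1 scale.
# Topics, in order: fruit, yellow objects, abstract concept, animal.
topic_vectors = {
    "mango":   [1.0, 0.5, 0.0, 0.0],
    "banana":  [1.0, 1.0, 0.0, 0.0],
    "canary":  [0.0, 1.0, 0.0, 1.0],
    "justice": [0.0, 0.0, 1.0, 0.0],
}

def distance(word_a, word_b):
    """Straight-line (Euclidean) distance between two topic vectors."""
    return math.dist(topic_vectors[word_a], topic_vectors[word_b])

def closest(word):
    """Return the other word whose topic vector is nearest to `word`."""
    others = [w for w in topic_vectors if w != word]
    return min(others, key=lambda w: distance(word, w))

print(distance("mango", "banana"))
print(closest("mango"))
```

With these made-up scores, “banana” ends up nearest to “mango”, while “justice” and “canary” are equally far away.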

Latent semantic analysis (LSA)

One of the first successful attempts at solving this problem in an algorithmic way was the idea of Latent Semantic Analysis published in 1988. The idea was that if you add up the vectors of the words in the document, it should do a good enough job of representing the topic of the document. Now, based on the vector representing the document, if you guess the word distribution in the document, you may not get the exact word distribution but you might get something close as long as we had done a good job of picking the topics and association of words to topics.

For a given document, we could measure the difference in the original distribution of words and the re-constructed distribution of words from the topic vectors. Let’s call this difference the error in reconstruction. Now, if we have a large collection of documents, we could add up the error across all the documents. The key intuition of this paper was that the problem of picking topics and their connections to words could be considered a mathematical optimization problem that tries to reduce the total error.

Furthermore, if you constrain the functions taking you from words to topics and from topics to words to be linear, the process of finding the best topics and best vectors for words so that the error is minimized is identical to another mathematical problem called Principal Component Analysis (PCA). Luckily, PCA has a closed-form solution that can be computed efficiently.
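For readers who want to see the optimization written down, here is one way to state it. The notation is mine rather than the original paper’s: X is the document-by-word count matrix with one row x_d per document, k is the number of topics, A maps words to topics, and B maps topics back to words.

```latex
% Total reconstruction error over all documents, with linear maps A and B
E(A, B) \;=\; \sum_{d} \bigl\lVert x_d - x_d A B \bigr\rVert^2
        \;=\; \bigl\lVert X - X A B \bigr\rVert_F^2,
\qquad A \in \mathbb{R}^{V \times k},\; B \in \mathbb{R}^{k \times V}

% Closed-form minimizer from the singular value decomposition X = U \Sigma V^\top:
% keep only the k largest singular values and their singular vectors
A = V_k, \qquad B = V_k^\top, \qquad
X A B = U_k \Sigma_k V_k^\top
```

The rows of V_k give each word its k-dimensional vector. PCA solves the same problem after subtracting the mean from each column of X.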

The vectors computed in this way were fairly useful in finding the conceptual overlap between words and very useful for things like web searches. One disadvantage of computing vectors this way was that it ignored coherent concepts, instead reducing language to arbitrary mathematical optimization. Sometimes you could see the hidden meaning behind the numbers in the vector, and sometimes you couldn’t. This was our first clue that advanced mathematical modeling of language would be less and less explainable.

Significance of vector representation of words

From the very start, word embeddings were a powerful tool for measuring similarities in the meaning of two words. As the techniques for computing these embeddings improved, it turned out that you could do arithmetic with the meaning of words in surprising ways. For example, if you take the embedding of king, subtract the embedding of man, and then add the embedding of woman, you get something very close to the embedding of queen. What this means is that you can mathematically manipulate concepts. This is a big deal. Until then, the only way you could solve this kind of problem was through rules and the application of logic, a brittle way of encapsulating real-life complexities.
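The king and queen arithmetic can be imitated with a toy example in Python. The three-dimensional vectors below are hand-made so that the analogy works; real embeddings are learned from text, have hundreds of dimensions, and only approximately satisfy this relationship. The function name `analogy` is also just for illustration.

```python
import math

# Hypothetical hand-made embeddings; axes: royalty, male, female.
embeddings = {
    "king":     [0.9, 0.9, 0.1],
    "queen":    [0.9, 0.1, 0.9],
    "man":      [0.1, 0.9, 0.1],
    "woman":    [0.1, 0.1, 0.9],
    "princess": [0.6, 0.1, 0.9],
}

def cosine(u, v):
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))

def analogy(a, b, c):
    """Find the word closest to embedding(a) - embedding(b) + embedding(c)."""
    target = [x - y + z for x, y, z in zip(embeddings[a], embeddings[b], embeddings[c])]
    candidates = [w for w in embeddings if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(embeddings[w], target))

print(analogy("king", "man", "woman"))
```

The input words are excluded from the candidates, which is a common convention when evaluating analogies like this.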

With these embeddings, you can map and translate concepts from one language to another, produce summaries, and mix the meaning of words to get another word. For example, jaguar + animal will represent a different concept than jaguar + car. Or you can ask what word represents sad + lonely. Put into practice, this gives models the ability to suggest whether an email is significant or spam, perform sentiment analysis of an online review, and most importantly run semantic search.

Going beyond linear functions

The LSA approach above artificially constrained the functions going from word to topic to be linear for mathematical and computational convenience. The only question was, are there better embedding functions to be found if we relax these constraints so that we can discover better representations of concepts for the various applications listed above?

While multi-layer neural networks were always a very promising class of functions for representing arbitrarily complex functions, they were notoriously difficult to train and, for the most part, had fallen out of favor. However, a few research groups strongly believed in their power and kept trying different techniques for decades to make them do useful things. After many different iterations, Geoff Hinton’s group and Yoshua Bengio’s group successfully demonstrated that neural networks could do a great job of learning representations of words and concepts as embeddings. Along with Yann LeCun’s work on Convolutional Neural Networks (CNNs), this work is considered pivotal in the resurgence of neural networks in their current form as the most powerful tool in machine learning.

AlexNet and GPUs

2012 was another pivotal year for the resurgence of neural networks. Most experts credit this to AlexNet, which used GPUs to train a large CNN for computer vision tasks with substantially better results than anything else in the field. The idea of using GPUs had been floating around for a while, but the magnitude of AlexNet’s success opened the door to many others who trained larger and larger neural networks with bigger training sets and more and more compute. One big realization for researchers at this time was that the more data and compute you were willing to give your neural network, the better the results. This was not the case with most other machine learning methods. Most other approaches saturated after a while, but the neural nets kept going. The two fields that benefited the most from this approach were computer vision and NLP. From there, innovation became very rapid and it hasn’t stopped since.

Adding context to words

One problem with trying to map words to embeddings was determining context. Words can have very different meanings depending on how they’re used. For example, the word bank has two completely different meanings in bank balance and river bank, and therefore must have two different embeddings.

From 2013 to 2018 a series of research papers improved the quality of embeddings by accounting for other words in the context and using different model architectures. The most influential papers in this period were Word2Vec (2013), GloVe (2014), and ELMo (2018), which tried different techniques for capturing the meaning of a word in its context. The techniques behind these results are well worth studying but are not that relevant to our purpose of understanding ChatGPT. However, the idea of understanding context is itself very important to understanding ChatGPT.

Machine translation

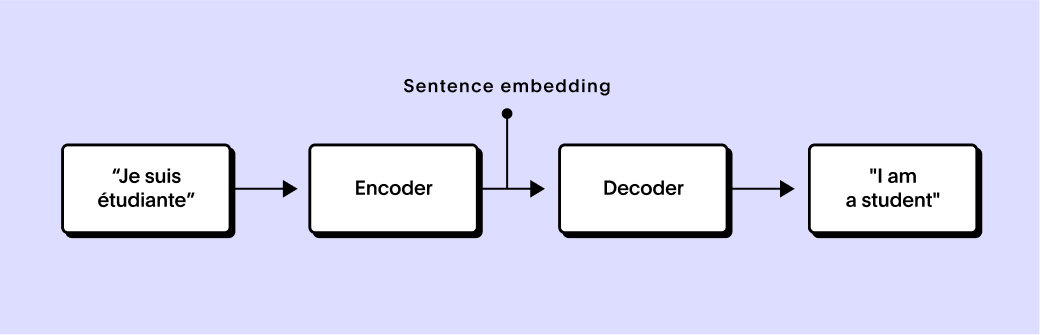

One particular problem that proved fertile ground for applying various neural network advancements was translating text from one language to another. The key idea here was computing sentence embeddings that map a full sentence to a vector and capture all the meaning in the sentence. This vector can then be passed to another network and translated into text in a different language. In that sense, you have an encoder network that takes the sentence and encodes it into an embedding, and a decoder network that decodes it. This combined network can then be trained on all the available training data for any language pair where we have human-generated translations.

Even early attempts at using neural networks looked quite promising compared to classical techniques, and they also provided the grounds for a lot of the innovation that followed.

How ChatGPT is able to understand human conversation

So far, we saw how embedding words or sentences into a high-dimensional space is useful for many NLP tasks. We also saw that interpreting words or sentences within the context in which they occur is very important, and also challenging. In the next article, we will look at various sequence-to-sequence models starting from RNNs building toward the Transformer Architecture which was the key innovation that made ChatGPT possible.

